I have this test script I use to remind myself of the fastest way I’ve found to copy data into an Oracle table. It shows three things:

- Fast way to create a new table populating with data

- Fast way to create an index on a large table

- Fast way to insert a lot of data into a table

The essence of this is that you want everything done nologging, in parallel, and using direct path.

In this example we have two tables – source and target. We are copying data from source to target. Here is how we create a new table called target with all the data in source:

create table target parallel 4 nologging as select /*+parallel(source 4) */ * from source;

The parallel 4 makes the inserts into the target table be done with 4 parallel processes. Nologging prevents these changes from going to the redo logs for recovery. The hint – parallel (source 4) – causes the source table to be queried with four parallel processes. What makes this so fast is that both the select and insert are done in parallel. Also, in this “nologging create table as select” scenario the database uses direct path inserts which loads the blocks up in bulk rather than one row at a time.

Next I build an index on the target table:

create index target_idx on target (KEY1) parallel 4 nologging;

This is fast because again you are not logging to the redo logs and you are using 4 parallel processes.

Lastly, and most important this is how to insert a lot of data into an existing table very quickly:

ALTER SESSION ENABLE PARALLEL DML; insert into target select /*+parallel(source 4) */* from source;

This assumes your target table was created with parallel 4 just as in our previous example. The alter session command is essential because without it the database will not insert the data in parallel. If you do the alter session and the target table has parallel greater than 1 then your insert will be in parallel and it will use direct path load. But, you also have to make sure your query is done with equal parallelism. In this example the target table was parallel 4 so the parallel hint on the select makes the select parallel 4.

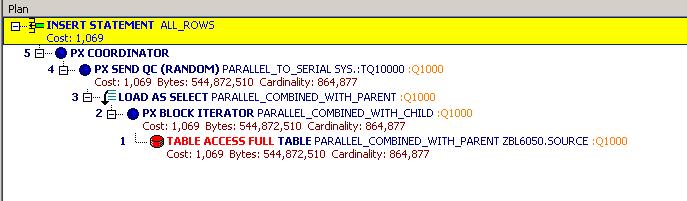

Here is the plan for the insert statement:

The key here is the LOAD AS SELECT which tells you it is using direct path inserts. Also, the PARALLEL_… on the LOAD tells you it is loading in parallel. Lastly the PX BLOCK ITERATOR tells you it is querying the table in parallel. You can’t have any of these missing or else you won’t have the fastest way to do the insert: parallel read and write and direct path insert.

– Bobby