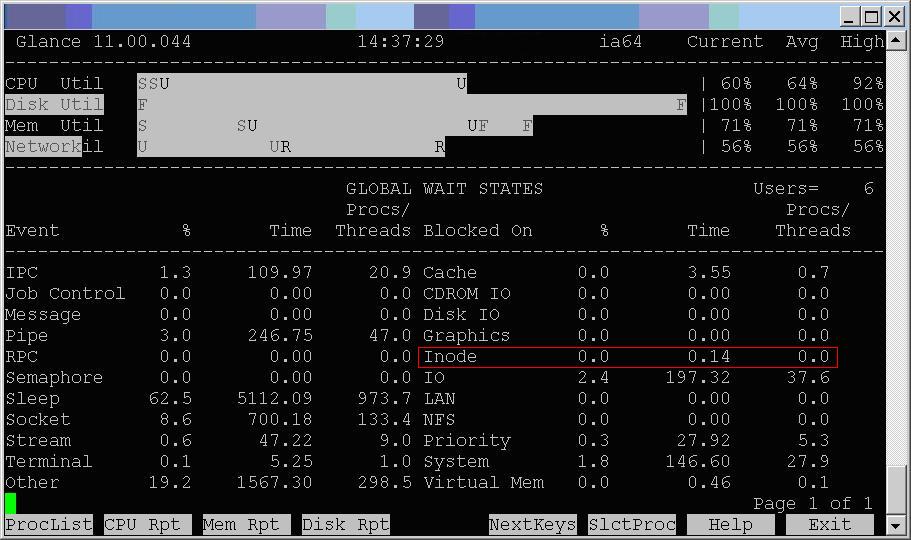

Hopefully this isn’t too redundant. Saw another performance issue today caused by a type mismatch between a bind variable and the column it was compared to in a SQL statement. I saw this in some real code and then built a simple testcase to prove out the concept: zip of testcase SQL and log.

The column being compared against was type VARCHAR2 and the bind variable was NUMBER. Converting the variable to a character type with TO_CHAR resolved the issue.

Table definition:

create table test (OWNER VARCHAR2(30), TABLE_NAME VARCHAR2(30), TEST_COLUMN VARCHAR2(2000)); create index testi on test(TEST_COLUMN);

PL/SQL variable of the wrong type (number):

declare V_NUMBER number; begin V_NUMBER := 1;

Execute immediate passing the bind variable that does not match the type of the column TEST_COLUMN:

execute immediate 'UPDATE TEST SET TABLE_NAME=''UPDATED'' WHERE TEST_COLUMN=:1' using V_NUMBER;

Note that I put in a carriage return so it would fit the blog width. It is one line in the script.

Execute immediate converting the variable to a character before passing it so it matches the column in the where clause:

execute immediate 'UPDATE TEST SET TABLE_NAME=''UPDATEDAGAIN'' WHERE TEST_COLUMN=:1' using to_char(V_NUMBER);

With the TO_CHAR the update is much faster:

No TO_CHAR: Elapsed: 00:00:01.45 With TO_CHAR: Elapsed: 00:00:00.04

Plan without the TO_CHAR is full scan:

SQL_ID 41vfab6v87w4g, child number 0

-------------------------------------

UPDATE TEST SET TABLE_NAME='UPDATED' WHERE TEST_COLUMN=:1

Plan hash value: 3859524075

---------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

---------------------------------------------------------------------------

| 0 | UPDATE STATEMENT | | | | 2579 (100)| |

| 1 | UPDATE | TEST | | | | |

|* 2 | TABLE ACCESS FULL| TEST | 2 | 46 | 2579 (4)| 00:00:37 |

---------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

2 - filter(TO_NUMBER("TEST_COLUMN")=:1)

Plan with the TO_CHAR is index range scan:

SQL_ID 3229aq5w36kst, child number 0

-------------------------------------

UPDATE TEST SET TABLE_NAME='UPDATEDAGAIN' WHERE TEST_COLUMN=:1

Plan hash value: 3736755925

---------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

---------------------------------------------------------------------------

| 0 | UPDATE STATEMENT | | | | 5 (100)| |

| 1 | UPDATE | TEST | | | | |

|* 2 | INDEX RANGE SCAN| TESTI | 2 | 46 | 3 (0)| 00:00:01 |

---------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

2 - access("TEST_COLUMN"=:1)

– Bobby