A few days or weeks ago I saw a Twitter post about database links failing on Oracle databases next year. So, I have researched this issue. My company has a bunch of Oracle databases with a web of database links connecting them. After researching this for a while I have convinced myself that our company will not experience an issue. I want to share some of what I have found in this post.

First, I have read some helpful documents on Oracle’s support site. I recommend that you review these if you want to understand the issue:

Mandatory Patching Requirement for Database Versions 11.2.0.3 or Earlier, Using DB Links (Doc ID 2335265.1)

Recommended patches and actions for Oracle databases versions 12.1.0.1, 11.2.0.3 and earlier – before June 2019 (Doc ID 2361478.1)

Master Note: Overview for SCN issues (Doc ID 1503937.1)

System Change Number (SCN), Headroom, Security and Patch Information (Doc ID 1376995.1)

ORA-19706 and Related Alert Log Messages (Doc ID 1393360.1)

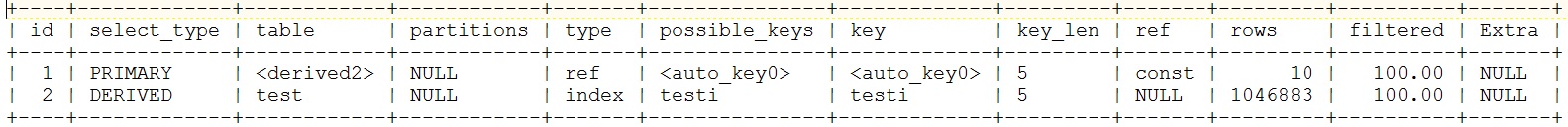

Bug 23130347 : HIGH SCN GROWTH RATE

Besides reading Oracle support documents I wanted to recreate a database link issue on a couple of test databases. My idea was to build a database that was not patched enough based on Oracle’s recommendations and one that was. Then connect them and set the date forward to after June 23, 2019 and see if I could create the issues that could happen at that time. I was not successful in recreating the issue. But I did prove out one interesting fact about database links and SCN numbers. When you use a database link the Oracle RDBMS synchronizes the SCN numbers of the two databases. Here is an example. I have 11.2.0.3.0 and 12.1.0.2 databases running on small Linux VMs on top of VirtualBox on my laptop.

Here are their SCN numbers after I create a database link between the two and before I use the database link:

NLSRTL Version 11.2.0.3.0 - Production

ORCL:SYSTEM>ORCL:SYSTEM>

CURRENT_SCN

----------------

1003971

NLSRTL Version 12.1.0.2.0 - Production

ORCL:SYSTEM>ORCL:SYSTEM>

CURRENT_SCN

----------------

1858602

Here are their SCN numbers after an update over a link:

NLSRTL Version 11.2.0.3.0

ORCL:SYSTEM>ORCL:SYSTEM>

CURRENT_SCN

----------------

1859090

NLSRTL Version 12.1.0.2.0

ORCL:SYSTEM>ORCL:SYSTEM>

CURRENT_SCN

----------------

1859091

I did the same thing with a select statement and got the same sort of synchronization of SCN numbers.

Knowing that database links cause SCNs to sync I started looking at our SCNs on all of our databases and found that there were two groups of databases with very similar SCNs. One group’s SCN was around 475,000,000,000 and the other group’s SCN was around 970,000,000,000.

But, based on the documents that I have read the max SCN for May 16th, 2018, today, is almost 16 trillion. We are under 1 trillion on our busiest system so we have a lot of headroom. The Oracle support documents say that there is some internal limit to how much your SCN can grow and Oracle bases the limit on the number of seconds since midnight January 1, 1988. You multiply the seconds by 16K SCN numbers per second to get the max SCN for today’s date. I found a lot of this math in scnhealthcheck.sql which Oracle’s Doc ID 1503937.1 includes. I hacked it into different pieces that I found helpful but I didn’t think I should post them because it is a copyrighted script. Just download it from Oracle support and see if what I’m saying makes sense.

Anyway, after reading up on all this stuff I don’t think that the SCN issues with database links apply to us. We have far too much headroom. Maybe a company with higher transaction rate databases would be closer to the limit. We will find out on June 23, 2019 if I am right.

Bobby