Monday, I used the coe_xfr_sql_profile.sql script from Oracle Support’s SQLT scripts to resolve a performance issue. I had to set the parameter force_match to TRUE so that the SQL Profile I created would apply to all SQL statements with the same FORCE_MATCHING_SIGNATURE value.

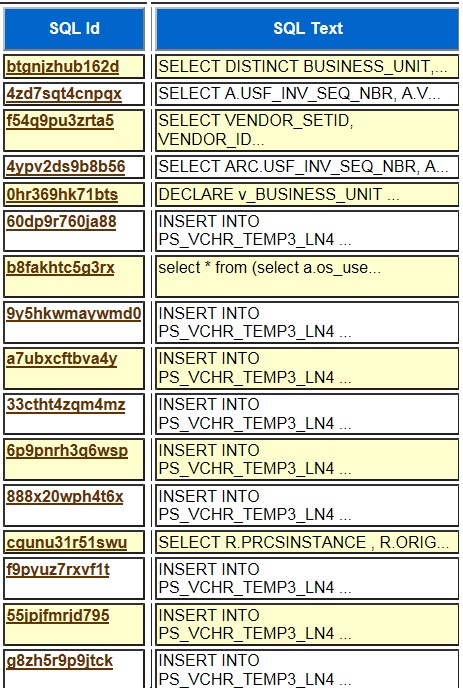

I just finished going off the on-call rotation at 8 am Monday and around 4 pm on Monday a coworker came up to me with a performance problem. A PeopleSoft Financials job was running longer than it normally did. Since it had run for several hours, I got an AWR report of the last hour and looked at the SQL ordered by Elapsed Time section and found a number of similar INSERT statements with different SQL_IDs.

The inserts were the same except for certain constant values. So, I used my fmsstat2.sql script with ss.sql_id = ’60dp9r760ja88′ to get the FORCE_MATCHING_SIGNATURE value for these inserts. Here is the output:

Now that I had the FORCE_MATCHING_SIGNATURE value 5442820596869317879 I reran fmsstat2.sql with ss.FORCE_MATCHING_SIGNATURE = 5442820596869317879 instead of ss.sql_id = ’60dp9r760ja88′ and got all of the insert statements and their PLAN_HASH_VALUE values. I needed these to use coe_xfr_sql_profile.sql to generate a script to create a SQL Profile to force a better plan onto the insert statements. Here is the beginning of the output of the fmsstat2.sql script:

The first few lines show the good plan that these inserts ran on earlier runs. The good plan has PLAN_HASH_VALUE 1314604389 and runs in about 600 milliseconds. The bad plan has PLAN_HASH_VALUE 3334601 and runs in 100 or so seconds. I took a look at the plans before doing the SQL Profile but did not really dig into why the plans changed. It was 4:30 pm or so and I was trying to get out the door since I was not on call and wanted to get home at a normal time and leave the problems to the on-call DBA. Here is the good plan:

Here is the bad plan:

Notice that in the bad plan the Rows column has 1 in it on many of the lines, but in the good plan it has larger numbers. Something about the statistics and the values in the where clause caused the optimizer to build the bad plan as if no rows would be accessed from these tables even though many rows would be accessed. So, it made a plan based on wrong information. But I had no time to dig further. I did ask my coworker if anything had changed about this job and nothing had.

So, I created a SQL Profile script by going to the utl subdirectory under sqlt where it was installed on the database server. I generated the script by running coe_xfr_sql_profile gwv75p0fyf9ys 1314604389. I edited the created script by the name coe_xfr_sql_profile_gwv75p0fyf9ys_1314604389.sql and changed the setting force_match=>FALSE to force_match=>TRUE and ran the script. The long running job finished shortly thereafter, and no new incidents have occurred in future runs.

The only thing that confuses me is that when I run fmsstat2.sql now with ss.FORCE_MATCHING_SIGNATURE = 5442820596869317879 I do not see any runs with the good plan. Maybe future runs of the job have a different FORCE_MATCHING_SIGNATURE and the SQL Profile only helped the one job. If that is true, the future runs may have had the correct statistics and run the good plan on their own. It could be that the INSERT statements are now running so fast that they are not recorded in the AWR as one of the top SQL statements.

I wanted to post this to give an example of using force_match=>TRUE with coe_xfr_sql_profile. I had an earlier post about this subject, but I thought another example could not hurt. I also wanted to show how I use fmsstat2.sql to find multiple SQL statements by their FORCE_MATCHING_SIGNATURE value. I realize that SQL Profiles are a kind of band aid rather than a solution to the real problem. But I got out of the door by 5 pm on Monday and did not get woken up in the middle of the night so sometimes a quick fix is what you need.

Bobby

Presumably, when there were good and bad plans the SQL statement was appearing in AWR top N and therefore both good and bad plans were captured.

Once it was “fixed”, it was no longer captured as top N.

FORCE_MATCHING_SIGNATURE would only change if the actual SQL statement had materially changed

I think you are right. I need to get in the habit of looking at V$ views after I apply a SQL Profile. I am so used to using my scripts that access the AWR views to show history that I forget about the V$ views that show recently executed SQL statements.

Nice example of using coe_xfr_sql_profile.sql to generate SQL profiles. However, this example comes from PeopleSoft, and relates to a statement from Application Engine that uses PeopleSoft three temporary records; PS_PST_VCHR_TAO4, PS_DIST_LINE_TMP4 and PS_VCHR_TEMP_LN4.

However, if you ran two concurrent instances of the same problem (or if this is a restartable AE anda previous instance crashed leaving the non-shared instances of the temporary records locked to its process instance) then you would use different temporary table instances, perhaps PS_PST_VCHR_TAO5, PS_DIST_LINE_TMP5 and PS_VCHR_TEMP_LN5. If the statement references different tables then it has adifferent SQL_ID and a different force matching signature, and you would need a different SQL Profile.

It is not impossible to see a single application engine use different instances of different temporary records. So you might conceivably see a statement reference PS_PST_VCHR_TAO4, PS_DIST_LINE_TMP5 and PS_VCHR_TEMP_LN6.

So you need at least a SQL Profile for every temporary table instance, and perhaps one for each permutation of possible instance of each table.

It gets worse. You started from instance 4 because by default the first three instances are reserved for on-line application engines, but if you changed that from 3 to say 5, then you would have started with PS_PST_VCHR_TAO6 etc. and so you would need addition SQL profiles.

If you need to allow for greater concurrency of this program, and so increase the number of temporary table instances for this Application Engine and create the additional temporary table instances, you would again need to create additional SQL profiles.

SQL profiles could become an administrative nightmare here. Get it wrong and you could get different SQL execution plans at different times.

In general, adding hints to application code is not good pratice. Not least because you need an application change and test cycle to change or remove them. However, you may feel that this may be a justifiable exception to the general rule and adding hints to the code might be the lesser evil.

Thanks for your comment David. At my company we apply SQL Profiles without application testing and change approval. We document the SQL Profile with a “standard change”. My reasoning is that we are forcing the SQL statement to run a plan that it had in the past so it has already been tested. Since we have used SQL Profiles on many databases to resolve many performance issues I feel like we have tested SQL Profiles as a technology. We have hundreds of databases and our budget is tight so it helps to have a band aid like SQL Profiles. I was unsure about applying a SQL Profile to a PeopleSoft SQL statement that uses PeopleSoft temporary tables for reasons like the ones you listed. But it did seem to resolve this issue. I feel the tension between creating a bunch of SQL Profiles and fixing the application and the stats gathering to resolve the issue without them. There are pros and cons. I can apply a SQL Profile by myself without anyone else’s help or approval and that makes things a lot faster in a large company.

Thanks again for commenting on this blog post.

Bobby

Does the DB param cursor sharing needs to be at a certain value for this sql profile to be picked up by same signature sql statements? Say exact, force etc.

Thanks for your comment. The force_match TRUE setting in a coe_xfr_sql_profile script does not need any particular setting for cursor sharing. The systems I work on have cursor_sharing=EXACT.

Bobby